Research

Current Students

Colin Shea-Blymyer (PhD; Ethics in AI)

Nicole Fronda (PhD; Neuro-Symbolic AI)

Charles Koll (PhD; Runtime Monitoring)

Sahir Gill (PhD, co-advised with Elaine Cozzi; Resilient and secure control architectures for UAS)

Denue Grant (MS; Self-driving behavior preferences of differently abled populations)

Richard Pelphrey (MS; Logical Signal Processing)

Khushal Brahmbhatt (MS; Fair temporal logic control)

Graduated Students

Niraj Basnet (MS; Logical Signal Processing)

Connor Kurtz (MS; Fair temporal logic control)

Claire Hekkala (Honors’ College; Logic inference)

Nathan Jewell-Carlton (Honors’ College; Parallel reachability)

Current Projects

Runtime Verification at the Edge (NSF SHF 2118179)

The environments we live in, autonomous technologies we are developing, and even our bodies, are now instrumented: limited-resource nodes collect large amounts of data in real-time to better track and explain their system’s and environment’s behavior. A 2019 Cisco study found that there are 28.5 billion networked devices and connections in the world. Within this massive ecosystem, one class of future critical applications stands out: software applications that use networked nodes to provide detection of safety risks in the system or its physical environment. Example applications that require such monitoring include fleets of autonomous vehicles, health-monitoring wearables, search-and-rescue, and climate monitoring. These applications are already transforming lives, but suffer from a lack of timely, reliable and energy-efficient tools to monitor their correct operation. The focus of this project is to provide precisely such a monitoring infrastructure. This will require overcoming several difficulties: first, the monitoring code must be automatically generated, rather than hand-written, as this reduces the likelihood of errors. The monitor must be able to deal with analog/physical signals produced by the observed phenomena, such as wave heights or temperatures. It must also deal with drifting clocks on the different nodes, which do not read the same moment in time. It must also be resilient to node crashes and malicious attacks. Finally, it must be distributed over the nodes, rather than centralized, since this is less prone to catastrophic failures.

In this project, we will be radically extending the reach of runtime monitoring to new and economically important edge applications. This will be achieved by implementing three research thrusts: (1) the first theory for distributed monitoring of continuous-time, asynchronous signals. The algorithms perform distributed optimization on the edge nodes themselves, thus eliminating the need for a central monitor. Convergence proofs establish soundness. The algorithms incorporate partial knowledge of signal dynamics, where available, to accelerate convergence. (2) A theory and algorithms for incremental monitoring, where intermediate calculation results are still usable by the application should some nodes crash. The monitors will also accommodate nodes that intentionally falsify their data. (3) A rigorous validation of the algorithms on realistic autonomous vehicles, to establish their performance within a full software stack and in the presence of real-world noise and failure conditions.

F1/10 RACECAR: Community Platforms for for Safe, Secure and Coordinated Autonomy (NSF CNS/CCRI 1925652)

F1/10 is a shared, open-sourced infrastructure for the development and validation of new approaches to autonomous perception, planning, control and coordination. This community platform will facilitate autonomous system research, education and bench-marking through the creation of a new class of high-performance autonomous racing cars, that are 1/10th the size of a real (Formula 1) car and can reach a top speed of 50mph. The goal is to enable a wide range of experimental research and education in Safe, Secure, Coordinated and Efficient Autonomy. Much of today’s research on Autonomous vehicles (AVs) is limited to experimentation on expensive commercial vehicles that require large teams with diverse skills and power-hungry platforms. Testing the limits of safety and performance on such vehicles is costly and hazardous. It is also outside the reach of most academic departments and research groups. Furthermore, little research has been devoted to the development of safety benchmarks and infrastructure for certifying autonomous systems, guaranteeing the safety of data-driven decision making, power-efficient perception and control for efficient autonomy, active coordinated safety for large vehicle fleets, and cyber-physical security of autonomous vehicles. All of these are fundamental requirements on the road to achieving the social benefits of autonomous vehicles, and autonomous systems more generally. F1/10 provides rapid prototyping hardware, software and algorithmic platforms, with full documentation and community support for research and development of future autonomous systems.

Best Engineering Practices for Autonomous Systems (FAA ASSURE)

Industry desires to automate many types of Unmanned Aerial Systems (UAS) operations to the extent possible. This research will explore automation failures that provide insights relevant to the design of automation to support UAS operations. Researchers will classify the various types of automation failures that are identified in order to better assess automation risks and areas of design that require special attention to eliminate or mitigate these risks in the operation of UAS.

Operational constraints and limitations of UAS design will also be assessed as part of the overall risk management approach defining the conditions necessary for automation to safely perform during UAS operations. Generalized design guidance and best engineering practices will be developed. Specific design guidance and recommendations will be proposed for a few key areas of UAS automation design that require special attention, to include architecture, redundancy, runtime assurance, and self-monitoring. This specific guidance will be validated through simulations and experiments. Research findings will be shared with an industry standards body.

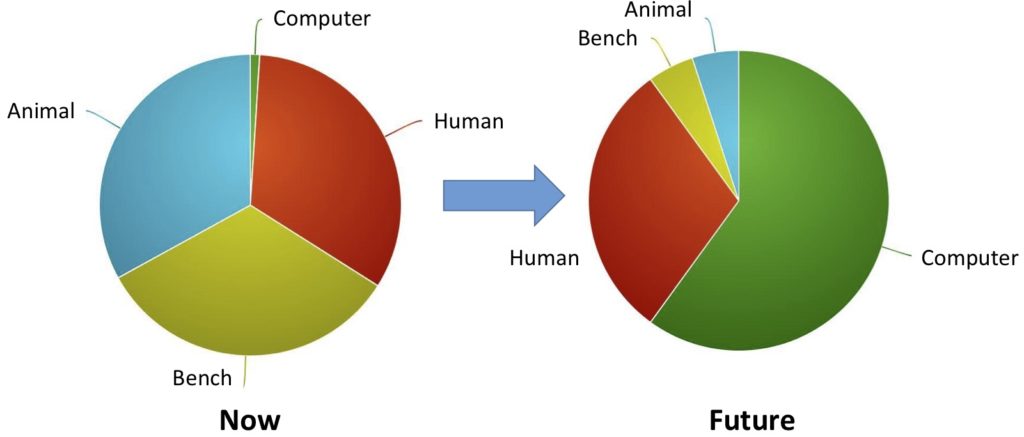

Fair Temporal Logic Control

When multiple robots share a resource, like UAVs, sharing the airspace, we intuitively do not want one of them to over-use the resource, forcing others to under-use it. This project looks at various notions of fairness and develops algorithms that maximize fairness while ensuring mission satisfaction. Without such fairness guarantees, small operators are unlikely to enter the market and economic benefits of new technologies, especially in Urban Air Mobility, are likely to be missed.

Past Projects

UAS Cybersecurity and Safety Literature Review (FAA ASSURE)

This is a systematic literature review to understand the cybersecurity risks of Unpiloted Aerial Systems (UAS), and these risks’ potential impact on UAS integration into the national airspace. The review covers the last ten years. It sets the agenda for follow-on research that might seek to better assess the ease of realizing the threats identified in this work, and consequently provide more concrete guidance on the impact of integrating UAS into the national airspace.

Robustness-maximizing Control

The video shows 8 Crazyflie quadrotors executing a Reach-Avoid maneuver by following a robustness-maximizing trajectory that guarantees correctness in continuous-time and -space. See the CCTA paper for details of (an earlier version of) this controller.

This video shows a simulation of 8 quadrotors executing 2 different missions: 4 quadrotors perform a Reach-Avoid, and 4 perform a persistent surveillance mission. In both videos, the green box is the target region while red indicates an unsafe region.

Medical Devices: Verification, Programming and Clinical Trials

The design and implementation of reliable and correct medical device software is challenging, especially considering the limitations of hardware platforms on which it must run, and the compromises that they lead to. Safety recalls of pacemakers and Implantable Cardioverter Defibrillators (ICDs) between 1990 and 2000 affected over 600,000 devices. Of these, 41% were due to firmware issues (i.e. software). Yet the need for these devices continues unabated: e.g., 600,000 pacemakers are now implanted yearly, and 120,000 defibrillators are implanted yearly in the US alone. There is a pressing need for 1) rigorous verification of correctness for these algorithms, and 2) efficient implementations that run on the limited hardware platforms. Correctness verification cannot be exhaustive (in the classical sense of formal methods) since the very notion of `correct’ is ambiguous in the medical domain, and very much patient-specific. We need an approach that combines a) statistical methods that yield population-level answers, similar to clinical trials, b) formal methods that cover cases where disease-specific models are available and their limitations are understood, and c) programming languages and paradigms that automatically yield efficient implementations.

My work tackles these three aspects and uses ICDs as the application domain of choice: even though ICDs are significantly more complex than pacemakers, I developed models of the heart and ICD that falls in a decidable class, thus opening the way to formal verification of specific operating modes of an ICD. Given a verified model, I investigate the use of streaming formalisms for the programming of ICD algorithms, which automatically yield cost-efficient implementations. And I leverage Bayesian methods to incorporate computer models in the design of population-wide clinical trials. The overall goal is to decrease the regulatory burden of developing medical devices by proving models to be as reliable in the medical field as they are in more traditional engineering fields like the automtive industry, thus paving the way to Model-Based (pre-)Clinical Trials

Drs. Liang Jackson (Electrophysiology fellow, HUP) and Ari Brooks (Director of Endocrine and Oncologic Surgery and Director of the Integrated Breast Center at HUP) on the challenges of medical devices and clinical trials, followed by an explanation of Model-Based Clinical Trials

A conversation with Dr. Sanjay Dixit, Director, Cardiac Electrophysiology Laboratories, Philadelphia VA Medical Center and Professor of Medicine at the Hospital of the University of Pennsylvania, on the use of computer simulation in Electrophysiology. This was presented at the kick-off meeting of CyberCardia, an NSF Frontiers grant